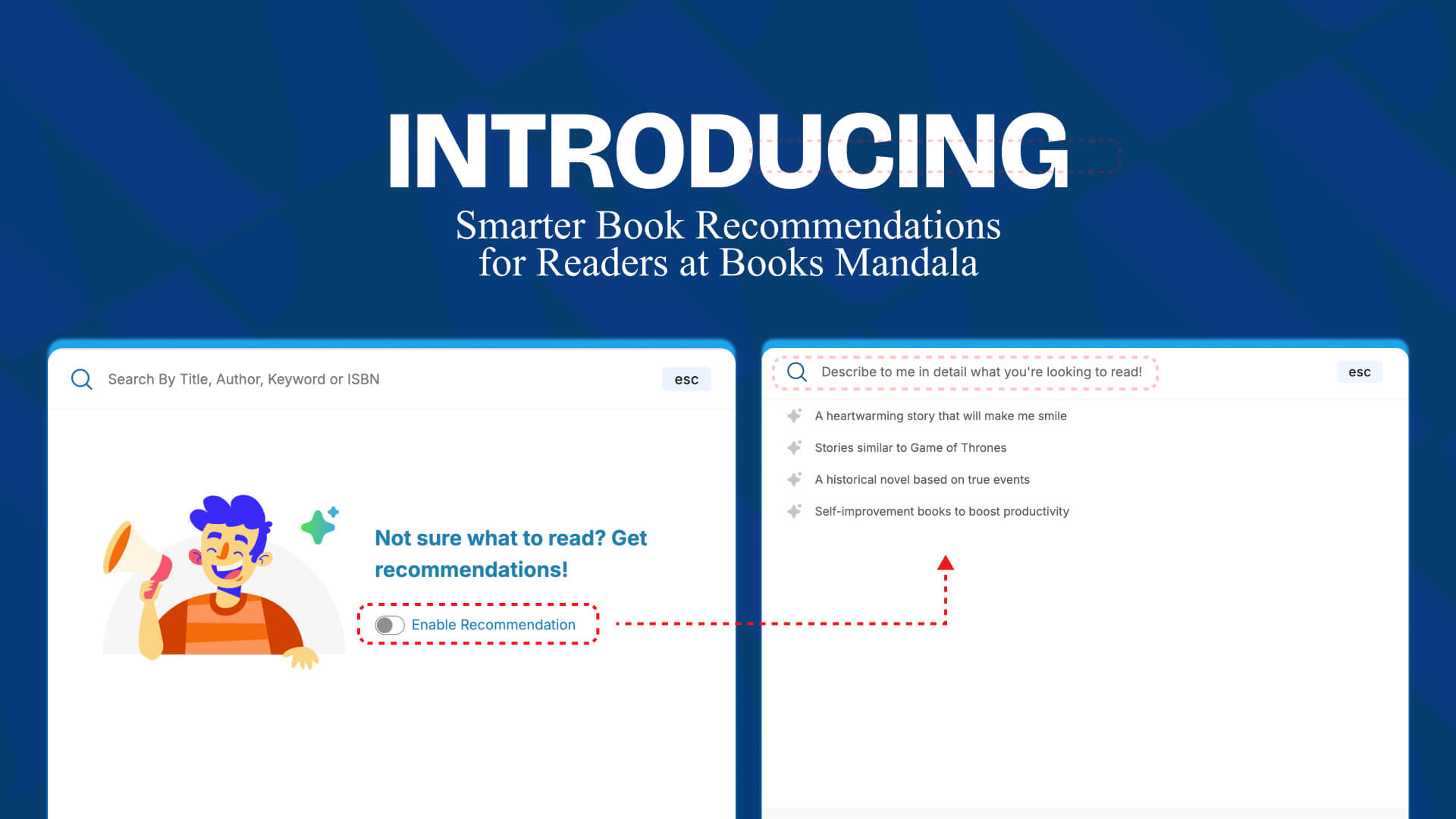

Smarter Book Recommendations for Readers at Books Mandala

At Mandala Tech, we’ve spent years building systems that bring clarity and care to digital experiences in Nepal. One of our longest-standing efforts has been behind booksmandala.com, a platform designed not just to sell books but to help people find the right ones.

Recently, we began integrating a new kind of recommendation engine into the Books Mandala experience. It is now live inside the search modal and represents the first building block of a broader, ongoing project we are developing internally under the name Jubliee AI.

While Jubliee AI is still in active development, this first feature, the real-time recommendation system, is already helping readers discover books in a more intuitive, intelligent way.

Why Traditional Book Search Wasn’t Enough

Books Mandala serves tens of thousands of readers across Nepal and beyond. But for years, our search system was built like most others: exact-match, keyword-reliant, and often unforgiving.

If you knew exactly what you were looking for, it worked well. If you searched for “Sapiens” or “Harry Potter Book 3,” you found your book. But if you typed “books for overthinkers” or “feel-good novels after heartbreak,” the system failed to understand your intent. This gap became increasingly visible as more readers came to us through organic search, social media, or recommendations from friends.

We knew we needed something better. Something that didn’t just look for matching text but understood what the reader actually wanted. The new recommendation engine does exactly that, and it is described in more detail on the Books Mandala website.

How the Recommendation System Works

We designed the recommendation engine to handle ambiguous, thematic, and emotionally driven search queries. It is trained to analyze natural language input and match it to relevant titles from our live inventory.

Architecture Overview

The system combines several modular components:

1. Recommendation Agent

This is the layer, powered by a language model, that interprets user queries. It expands minimal or vague search inputs, such as “books about healing” or “adventure stories with depth,” into internal representations that capture tone, theme, genre, and emotional arc.
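To make the idea of an "internal representation" concrete, here is a minimal sketch of what an expanded query could look like. The `QueryIntent` class, its field names, and the example values are all hypothetical and chosen only to mirror the four facets named above; the production agent may structure its output quite differently.

```python
from dataclasses import dataclass, field


@dataclass
class QueryIntent:
    """Hypothetical container for what the agent infers from a short query."""
    raw_query: str
    themes: list[str] = field(default_factory=list)
    genres: list[str] = field(default_factory=list)
    tone: str = ""
    emotional_arc: str = ""

    def to_search_text(self) -> str:
        """Flatten the intent into one string that an embedding model could encode."""
        parts = [self.raw_query, *self.themes, *self.genres, self.tone, self.emotional_arc]
        # Skip empty facets so a sparse intent still yields clean text.
        return " ".join(p for p in parts if p)


# Values a language model might plausibly produce; written by hand for illustration.
intent = QueryIntent(
    raw_query="books about healing",
    themes=["recovery", "self-compassion"],
    genres=["memoir", "self-help"],
    tone="gentle",
    emotional_arc="from grief to acceptance",
)
search_text = intent.to_search_text()
```

Flattening the facets into a single string is one simple way to hand a richer query to the embedding step; keeping them as separate fields would also allow per-facet filtering later.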

2. Qdrant Vector Search

Every book in our catalog is converted into a high-dimensional embedding using sentence transformers. We store these embeddings in a Qdrant vector database, allowing the system to perform fast, scalable semantic similarity searches.
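The core operation behind semantic similarity search can be illustrated without any external service. The sketch below is not the Qdrant or sentence-transformers API: it uses hand-made three-dimensional vectors and a brute-force cosine-similarity scan as a stand-in, and the book titles, vectors, and function names are assumptions for illustration. A vector database performs the same kind of ranking with indexing that scales to a full catalog.

```python
import math
from dataclasses import dataclass


@dataclass
class BookVector:
    title: str
    vector: list[float]  # toy embedding; real ones have hundreds of dimensions


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def search(query_vector: list[float], catalog: list[BookVector], limit: int = 3) -> list[tuple[str, float]]:
    """Brute-force search: score every book, return the `limit` most similar."""
    scored = [(book.title, cosine_similarity(query_vector, book.vector)) for book in catalog]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:limit]


# Illustrative axes only: (healing, adventure, history).
catalog = [
    BookVector("Hypothetical healing memoir", [0.9, 0.1, 0.0]),
    BookVector("Hypothetical mountain adventure", [0.2, 0.9, 0.1]),
    BookVector("Hypothetical history survey", [0.0, 0.1, 0.95]),
]

# A query vector that leans heavily toward the "healing" axis.
results = search([0.8, 0.2, 0.1], catalog, limit=2)
# The healing memoir ranks first, followed by the adventure title.
```

Cosine similarity compares direction rather than magnitude, which is why a short query and a long book description can still score as close matches when they point the same way in embedding space.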

3. FastAPI Backend

The backend infrastructure is built on FastAPI, providing a production-grade API for low-latency interaction with both the Books Mandala frontend and any future tools we plan to integrate, such as a CLI or admin dashboard.

4. BooksMandala Integration Layer

The recommendation engine is currently embedded in the search modal on booksmandala.com. When a user begins typing, the system retrieves and ranks books semantically related to their query. It shows only in-stock books and ignores non-book queries such as “write me a poem.”
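The two rules described here, dropping out-of-stock titles and ignoring non-book queries, can be sketched as a small post-retrieval step. Everything below is an assumption for illustration: the `Candidate` type, the `recommend` function, and especially the prefix list, which is a crude stand-in for whatever check the agent actually performs to decide that a query is not about books.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    title: str
    score: float    # semantic similarity from the retrieval step
    in_stock: bool


# Hypothetical stand-in for the agent's judgment; a real system would
# likely ask the language model rather than match on prefixes.
NON_BOOK_PREFIXES = ("write me", "tell me a joke", "translate")


def is_book_query(query: str) -> bool:
    """Reject empty input and queries that start with a known non-book request."""
    text = query.strip().lower()
    return bool(text) and not text.startswith(NON_BOOK_PREFIXES)


def recommend(query: str, candidates: list[Candidate], limit: int = 5) -> list[str]:
    """Return in-stock titles ranked by score, or nothing for non-book queries."""
    if not is_book_query(query):
        return []
    available = [c for c in candidates if c.in_stock]
    available.sort(key=lambda c: c.score, reverse=True)
    return [c.title for c in available[:limit]]


candidates = [
    Candidate("Hypothetical title A", 0.91, in_stock=False),
    Candidate("Hypothetical title B", 0.84, in_stock=True),
    Candidate("Hypothetical title C", 0.88, in_stock=True),
]

shown = recommend("uplifting novels after grief", candidates)
ignored = recommend("write me a poem", candidates)
```

In this example the highest-scoring candidate is out of stock, so it never reaches the reader, and the poem request returns an empty list instead of a forced match.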

What It Does Today

The live version of the recommendation system can already do the following:

- Interpret abstract reader intent and return accurate book suggestions

- Prioritize and filter for titles that are in stock right now

- Ignore irrelevant or invalid queries that do not relate to books

- Run live inside the user-facing search experience, enhancing discoverability

All of this is built without relying on third-party recommendation APIs or black-box models. We control every layer of logic, retrieval, and integration.

What’s Coming Next

While this first phase is live, the full system behind Jubliee AI is still under development. Over the next few months, we plan to expand its capabilities in several key areas, which we will reveal soon. As we build, we’re keeping the system modular, interpretable, and grounded in real-world usability. We are not interested in vague AI buzzwords. We are interested in helping people find books they will actually love.

Built by a Team That Lives With the Tools

One thing that makes Mandala Tech different is that we don’t just ship features and forget them. We use and maintain them ourselves. The same people who built this recommendation engine are monitoring its performance, improving its outputs, and talking directly to support teams and readers when things fall short.

It is not a plug-in. It is a system built from the ground up, by a team that ships its own code, manages its own infra, and works side-by-side with Books Mandala’s operations.

If you’re interested in seeing it in action, visit booksmandala.com and try searching for something like “uplifting novels after grief” or “books with strong female protagonists.” You may be surprised by what shows up, and by how quickly it helps you find your next read.

And if you’re working on vector search, language-based agents, or recommender systems for high-context domains, we’d love to connect!